Introduction

Today, we’re going to talk about how a government authority that handles very important exams made checking answer sheets easier by using a digital system.

This authority usually holds exams twice a year, and they have to check over 2,00,000 answer sheets in 30 to 40 days to announce the results on time.

Checking all these papers by hand was very challenging. Examiners from different parts of India had to travel to a central place to do this work. There were also problems with ensuring the quality of the checking because coordinating the checking and reviewing process was complicated.

To make things better, the authority decided to use a digital marking system from Eklavvya. This system lets examiners mark answers on a computer screen, which helps in managing the huge number of answer sheets more efficiently and improves the quality of the checking process.

In this case study, we’ll look closely at how this government body improved the way they check exam papers with the help of Eklavvya.

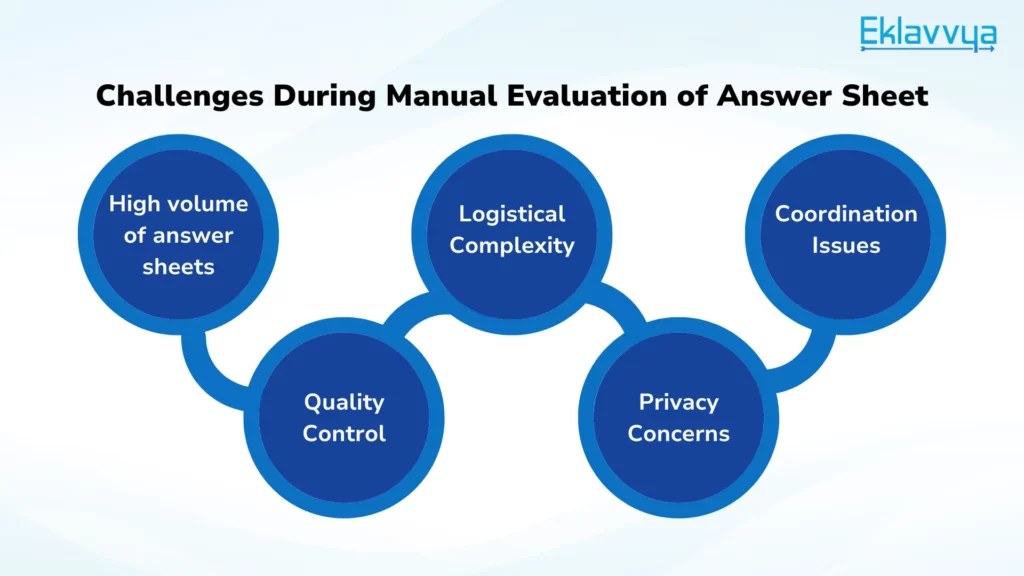

Challenges During Manual Evaluation of Answer Sheets

Before they started using a digital system for checking exam papers, the government authority faced many problems with the manual process.

The institution had to evaluate more than 2,00,000 answer sheets within 30 to 35 days.

Checking papers by hand was difficult for several reasons, such as logistical issues, coordination problems, and concerns about the quality of the checking. These challenges not only risked the fairness of the exams but also caused delays in announcing results, which made students unhappy.

This process was expensive and complicated. Also, without a central system to monitor everything, it was hard to keep track of how the checking was going and make sure it was finished on time.

Because no technology was used, maintaining a consistently high standard of checking across all examiners was tough. Protecting the privacy of students was another big issue.

With papers being passed around by hand, it was possible that students’ identities could accidentally be revealed to the examiners or moderators. All these problems with coordination and handling the exam papers meant that it took a long time to get the results ready.

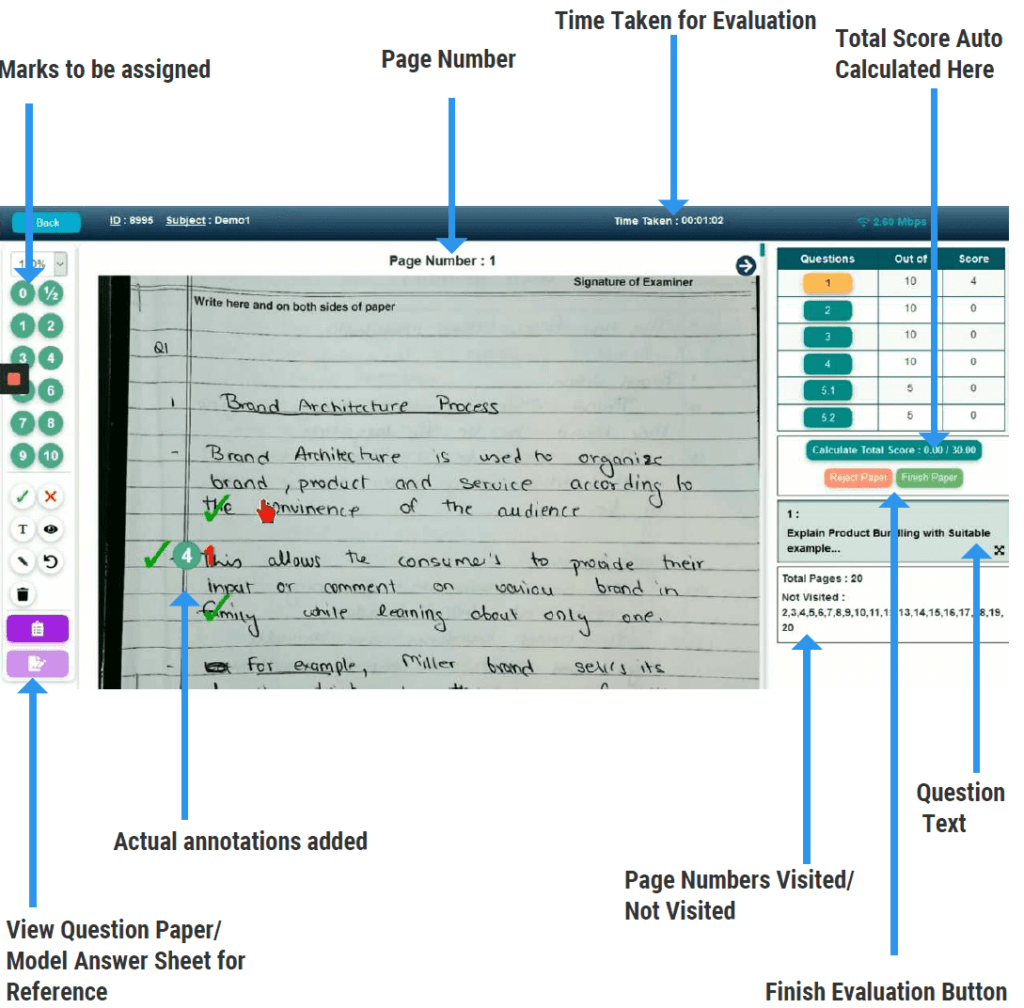

Embracing Onscreen Marking: A Solution-Focused Overview

The Eklavvya platform helped the government authority by making the exam-checking process more secure, scalable, and efficient, and by improving the quality of evaluation. It also saved a lot of the money that was previously spent on the manual process. A central dashboard that tracks all activities made it easier to manage everything from one place.

Let’s look into each activity and its impact in detail.

Scanning Activity

A team of 40 users scanned over 2,00,000 answer booklets within 3 weeks. The scanning team worked in three shifts to improve efficiency and quality and to make the best use of the scanners.

All physical answer sheets were scanned and converted into digital format. The scanned answer sheets were stored in a secure cloud environment with access control.

This eliminated the hassle of physically handling answer sheets for various processes, including evaluation, moderation, re-moderation, result processing, and student photocopy requests.

Training for Examiners

The success of any system greatly depends on the training and support given to the people using it. For the on-screen marking system, the government body in charge made sure that examiners received thorough training.

This was done through practical exercises like grading practice exams and watching instructional videos. They also held online webinars to teach examiners about the system, how to use it effectively, and the best practices for grading.

To make sure examiners could get help when they needed it, a dedicated help desk was set up to answer their questions.

This support system boosted the examiners’ confidence and made them more familiar with how the on-screen marking system worked. By practising with mock exams, they got a hands-on understanding of the system’s functionality.

The centralized webinars and training videos were particularly useful, helping the examiners grasp the system quickly and effectively. With this solid foundation of knowledge and support, the examiners were able to use the system confidently, which is crucial for the successful adoption of new technology in educational settings.

This approach ensured that examiners could efficiently and accurately evaluate student work using the new system.

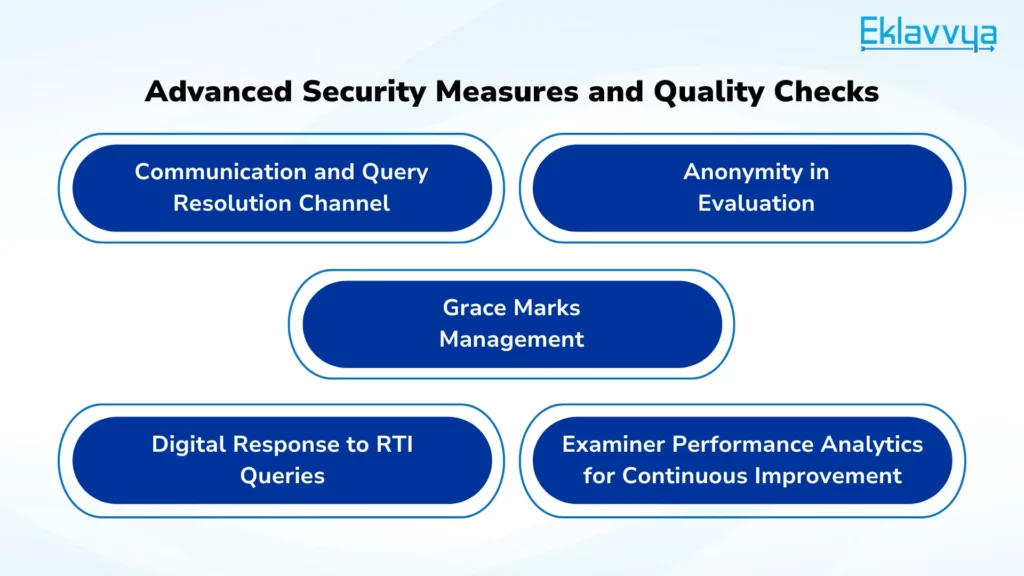

Advanced Security Measures and Quality Checks

The use of an on-screen marking system with strong security features helped the institution increase both the integrity and fairness of the evaluation process. Let’s break down the main points for a clearer understanding.

Two-Factor Authentication for System Access

The two-factor authentication system is a key feature. This system requires users to enter a unique code along with their usual username and password when logging in.

This unique code is generated each time a user tries to log in and is sent to an Android app on their mobile phone. This ensures that only authorized people can access the digital answer sheets, enhancing security.
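The login step described above can be sketched in a few lines. This is only an illustration, not Eklavvya's implementation: the function names, the 6-digit format, and the 120-second validity window are all assumptions, and delivery of the code to the mobile app is left out.

```python
import hmac
import secrets
import time

CODE_TTL_SECONDS = 120  # assumed validity window; the case study does not state one


def issue_login_code(now=None):
    """Create a fresh 6-digit code for one login attempt, plus its expiry time."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"  # cryptographically strong random digits
    return code, now + CODE_TTL_SECONDS


def verify_login_code(submitted, issued, expires_at, now=None):
    """Accept the code only if it matches the issued one and has not expired."""
    now = time.time() if now is None else now
    if now > expires_at:
        return False
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(submitted, issued)
```

In this sketch the code is checked only after the username and password have already been accepted, so a stolen password alone is not enough to open the answer sheets.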

Two-Step Verification for Quality

There’s a two-step verification process for checking the quality of the answer sheet evaluations. After examiners review the answers, some of these are randomly checked again by higher authorities.

This step ensures that the evaluations are consistent and high-quality, and it allows for immediate feedback if there are any issues.
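The random re-check can be pictured as drawing a sample from the evaluated sheets. The sketch below is a hypothetical illustration: the function name and the 10% sampling fraction are assumptions, since the case study does not say how many sheets are re-checked.

```python
import random


def pick_for_moderation(evaluated_sheet_ids, fraction=0.1, seed=None):
    """Randomly choose a share of evaluated sheets for a second check."""
    rng = random.Random(seed)  # a seed makes the draw repeatable for audits
    sheet_ids = list(evaluated_sheet_ids)
    if not sheet_ids:
        return []
    # Always re-check at least one sheet, however small the batch
    sample_size = max(1, round(len(sheet_ids) * fraction))
    return rng.sample(sheet_ids, sample_size)
```

Because the draw is random, an examiner cannot predict which of their sheets will be reviewed, which is what makes the second step a meaningful quality check.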

Communication and Query Resolution Channel

There’s a dedicated communication channel for examiners, moderators, and the head examiner. This system works like a ticketing system where queries from the head examiner appear as tickets to be resolved by the examiners or moderators.

This setup greatly improves coordination, speeds up resolving issues, and enhances the feedback process among all involved in the examination process.

Anonymity in Evaluation

One key aspect of checking exam papers is making sure that the personal information of students or invigilators is kept private from those evaluating the exams.

The on-screen marking system keeps this information anonymous during the evaluation. This means that details like the student’s name, roll number, subject, email address, or any personal information linked to the student or invigilator are hidden.

Examiners looking at the answer sheets won’t know the identity of the students or the people whose papers they are grading. By adding this layer of anonymity, the on-screen marking system makes the whole evaluation process much more secure.
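One common way to achieve this kind of masking is to strip the identifying fields from the record an examiner sees and replace them with a random token. The following is a minimal sketch under that assumption; the field names and the `anonymise` function are hypothetical and not taken from the Eklavvya platform.

```python
import uuid

# Fields the article lists as hidden from examiners (names are illustrative)
HIDDEN_FIELDS = {"student_name", "roll_number", "subject", "email", "invigilator_name"}


def anonymise(sheet):
    """Split a sheet record into an examiner view and a private identity map."""
    token = uuid.uuid4().hex  # random identifier with no link to the student
    examiner_view = {k: v for k, v in sheet.items() if k not in HIDDEN_FIELDS}
    examiner_view["sheet_token"] = token
    identity = {k: v for k, v in sheet.items() if k in HIDDEN_FIELDS}
    # The mapping stays with the authority and is used only when results are compiled
    return examiner_view, {token: identity}
```

The examiner works only with the first value returned; the second is kept separately so that marks can be matched back to the student once evaluation is complete.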

Grace Marks Management

In the process of evaluating exams, many institutions add extra marks, known as grace marks, to a student’s total score. This practice is common and varies based on different subjects and the student’s performance.

The high-stakes exams we’re discussing also follow this method, with specific rules for when and how grace marks are added for different subjects and performances. The on-screen marking system offers a feature that allows these institutions to set up these grace mark rules in advance.

This means that the system can automatically add grace marks to a student’s total score based on the predefined criteria. This automation makes calculating final results much easier and more efficient for the institution. As a result, it helps them announce exam results on time.
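As an illustration of how predefined grace mark rules might work, the sketch below assumes one simple rule shape: a student who misses the pass mark by no more than a set number of marks is lifted to the pass mark. The actual rules used by the authority are not described in this case study, so the `GraceRule` fields and the example values are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraceRule:
    pass_mark: int   # minimum score needed to pass the subject
    max_grace: int   # largest shortfall that grace marks may cover


def apply_grace(subject, raw_score, rules):
    """Return the score after applying the subject's grace rule, if any."""
    rule = rules.get(subject)
    if rule is None:
        return raw_score  # no rule configured for this subject
    shortfall = rule.pass_mark - raw_score
    if 0 < shortfall <= rule.max_grace:
        return rule.pass_mark  # near miss: lift the student to the pass mark
    return raw_score


# Hypothetical configuration set up before results are processed
RULES = {"Accounts": GraceRule(pass_mark=40, max_grace=3)}
```

With this configuration, `apply_grace("Accounts", 38, RULES)` gives 40, while a score of 36 stays unchanged because the shortfall is larger than the allowed grace.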

Digital Response to RTI Queries

When it comes to announcing results for important exams, dealing with requests for information, especially under the Right to Information Act (RTI), is a crucial step. This act allows students to ask for photocopies of their graded exam papers. They can make these requests by filing an RTI application.

In response to these requests, students were able to get digital copies of their graded answer sheets quickly, thanks to the use of modern technology. This was made possible by connecting the on-screen marking system with an advanced Application Programming Interface (API).

This connection meant that the examination authority could easily give students copies of their graded answer sheets online.

As a result, the entire procedure became more transparent and efficient. Students could see their graded answer sheets online as soon as they filed their RTI request.

This instant access helped make the process smoother for students, giving them quick insights into how their exams were graded and ensuring the transparency of the examination process.

Examiner Performance Analytics for Continuous Improvement

The on-screen marking system offered many benefits and insights for educational institutions. It made it easier to understand how a specific group of students performed. When examiners graded tests, the system would calculate the average score of that group, as well as how they did on each question and in different sections of the test.

It also looked into how long each examiner took to grade and what the average grading time was across examiners, and it provided a detailed analysis of the scores. This included things like how many students scored above 80%, how many fell into the 40-80% range, and how many scored below 30%.
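A score breakdown of this kind is straightforward to compute. The sketch below is illustrative only: the function name is hypothetical, and the band boundaries are parameters set here to 80% and 40% as an assumption rather than the platform's actual configuration.

```python
from statistics import mean


def summarise_scores(percentages, high=80, low=40):
    """Return the average score and the number of scripts in each band."""
    summary = {"average": None, "above_high": 0, "middle": 0, "below_low": 0}
    if not percentages:
        return summary
    summary["average"] = mean(percentages)
    for score in percentages:
        if score > high:
            summary["above_high"] += 1
        elif score >= low:
            summary["middle"] += 1
        else:
            summary["below_low"] += 1
    return summary
```

The same function can be run per question or per section by passing in only the scores for that part of the paper.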

Furthermore, this system gave out reports on score variations, average scores, and even detailed graphs showing the distribution of scores.

These reports and analyses were generated efficiently and helped institutions better understand not only how students were doing in specific subjects but also how evaluators were performing in terms of grading time.

This was a great tool for improving the evaluation process, both for assessing student performance and for evaluating how exams were graded. The system delivered detailed analytics on examiner performance by assessing variance and calculating the average marks awarded by each examiner.

These insights allow for comprehensive comparisons. Should a particular evaluator's marking deviate significantly from the average, it prompts a quality review or suggests reallocating the answer sheets to another examiner for reassessment.

Implementing onscreen marking has also given the institution data analytics for future reference, such as for gauging question paper difficulty and rating examiner performance.
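One way to picture this comparison is to measure how far each examiner's average mark sits from the average across all examiners. The sketch below uses a simple standard-deviation threshold; this is an assumed technique for illustration, and the case study does not say which statistic or cut-off the platform applies.

```python
from statistics import mean, pstdev


def flag_examiners(marks_by_examiner, z_threshold=2.0):
    """List examiners whose average mark is unusually far from the group."""
    averages = {name: mean(marks) for name, marks in marks_by_examiner.items() if marks}
    if len(averages) < 2:
        return []  # nothing to compare against
    overall = mean(averages.values())
    spread = pstdev(averages.values())
    if spread == 0:
        return []  # every examiner has the same average
    return sorted(
        name
        for name, avg in averages.items()
        if abs(avg - overall) / spread > z_threshold
    )
```

An examiner on this list is not necessarily marking wrongly; they may simply have received a stronger or weaker batch. The flag is a prompt for a moderator to look more closely.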

Transformative Outcomes

The entire process is now managed from one place, which streamlines operations and keeps every step running smoothly.

The institution witnessed substantial cost savings in the evaluation of answer sheets. Maintenance of digital copies proved to be cost-effective, eliminating the need for storing vast physical piles of answer sheets.

The logistical costs associated with examiner and moderator travel were eliminated, further reducing overhead and simplifying the logistical complexities traditionally involved in exam evaluation.

Examiner evaluation speed increased by 30 to 40%.

The accuracy of evaluations improved by 45% when compared to the traditional, physical evaluation methods.

The cost of the evaluation activity decreased by an impressive 63%, underscoring the financial efficiency of the onscreen marking system over the conventional evaluation process.

A New Benchmark in Examination Evaluation

The successful implementation of onscreen marking by the Central Government Exam Authority is not just a story of overcoming challenges; it’s a testament to the power of innovation in redefining the landscape of exam evaluations.

By setting new standards in security, fairness, and efficiency, onscreen marking has paved the way for how any high-stakes examination can be evaluated in the future.

About Eklavvya Onscreen Marking System

The Eklavvya onscreen marking platform has been successfully adopted by leading educational institutions, examination authorities, and universities to simplify the answer sheet evaluation process.

The Eklavvya platform has been used to conduct more than 50 million exams and evaluations across multiple customers. Register for a demo to understand the best practices of digital answer sheet evaluation.

![How Onscreen Marking Revolutionized Central Govt Exams [Case Study]](https://www.eklavvya.com/blog/wp-content/uploads/2024/04/How-Onscreen-Marking-Revolutionized-Central-Govt-Exams-Case-Study-1.webp)
